Keyword Extraction with BERT

When we want to understand key information from specific documents, we typically turn towards keyword extraction. Keyword extraction is the automated process of extracting the words and phrases that are most relevant to an input text.

With methods such as Rake and YAKE! we already have easy-to-use packages that can be used to extract keywords and keyphrases. However, these models typically work based on the statistical properties of a text and not so much on semantic similarity.

In comes BERT. BERT is a bi-directional transformer model that allows us to transform phrases and documents to vectors that capture their meaning.

What if we were to use BERT instead of statistical models?

Although there are many great papers and solutions out there that use BERT embeddings (e.g., 1, 2, 3), I could not find a simple and easy-to-use BERT-based solution. Instead, I decided to create KeyBERT, a minimal and easy-to-use keyword extraction technique that leverages BERT embeddings.

Now, the main topic of this article will not be the use of KeyBERT but a tutorial on how to use BERT to create your own keyword extraction model.

1. Data

For this tutorial, we are going to be using a document about supervised machine learning:

doc = """
Supervised learning is the machine learning task of
learning a function that maps an input to an output based
on example input-output pairs.[1] It infers a function
from labeled training data consisting of a set of
training examples.[2] In supervised learning, each
example is a pair consisting of an input object
(typically a vector) and a desired output value (also
called the supervisory signal). A supervised learning
algorithm analyzes the training data and produces an
inferred function, which can be used for mapping new
examples. An optimal scenario will allow for the algorithm
to correctly determine the class labels for unseen
instances. This requires the learning algorithm to
generalize from the training data to unseen situations
in a 'reasonable' way (see inductive bias).
"""

I believe that using a document about a topic you already know quite a bit about makes it easier to judge whether the resulting keyphrases are of good quality.

2. Candidate Keywords/Keyphrases

We start by creating a list of candidate keywords or keyphrases from a document. Although many focus on noun phrases, we are going to keep it simple by using Scikit-Learn's CountVectorizer. This allows us to specify the length of the keywords and make them into keyphrases. It is also a nice method for quickly removing stop words.

from sklearn.feature_extraction.text import CountVectorizer

n_gram_range = (1, 1)
stop_words = "english"

# Extract candidate words/phrases
count = CountVectorizer(ngram_range=n_gram_range, stop_words=stop_words).fit([doc])
# get_feature_names() was removed in scikit-learn 1.2; use get_feature_names_out()
candidates = list(count.get_feature_names_out())

We can use n_gram_range to change the size of the resulting candidates. For example, if we set it to (3, 3), the resulting candidates are phrases of 3 words each.

The variable candidates is then simply a list of strings that contains our candidate keywords/keyphrases.

NOTE: You can play around with n_gram_range to create different lengths of keyphrases. In that case, you might not want to remove stop words, as they can tie longer keyphrases together.
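To make the effect of n_gram_range and stop-word removal concrete, here is a minimal pure-Python sketch of the candidate step. It is a simplified stand-in for CountVectorizer, not its actual implementation: the function name ngram_candidates and the tiny STOP_WORDS set are assumptions made for illustration. Like CountVectorizer, it drops stop words before it builds the n-grams.

```python
import re

# Tiny illustrative stop-word list; scikit-learn's "english" list is much longer.
STOP_WORDS = {"a", "an", "the", "of", "is", "to", "that", "and", "in", "on"}


def ngram_candidates(text, n, stop_words=frozenset()):
    """Return the sorted, unique word n-grams of `text`.

    Stop words are removed before the n-grams are built, so words that
    were separated by a stop word can end up next to each other.
    """
    # Tokens of two or more word characters, lowercased.
    tokens = re.findall(r"\b\w\w+\b", text.lower())
    tokens = [t for t in tokens if t not in stop_words]
    ngrams = {" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}
    return sorted(ngrams)


sentence = "Supervised learning is the machine learning task of learning a function"

unigrams = ngram_candidates(sentence, 1, STOP_WORDS)
trigrams = ngram_candidates(sentence, 3, STOP_WORDS)
trigrams_with_stop_words = ngram_candidates(sentence, 3)

print(unigrams)
print(trigrams)
print(trigrams_with_stop_words)
```

With stop words removed, the trigrams include "supervised learning machine", a phrase that never occurs in the sentence. With stop words kept, you get phrases such as "the machine learning" instead, which is the trade-off the note above refers to.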

3. Embeddings

Next, we convert both the document and the candidate keywords/keyphrases to numerical data. We use BERT for this purpose as it has shown great results for both similarity and paraphrasing tasks.

There are many methods for generating the BERT embeddings, such as Flair, Hugging Face Transformers, and now even spaCy with their 3.0 release! However, I prefer to use the sentence-transformers package as it allows me to quickly create high-quality embeddings that work quite well for sentence- and document-level embeddings.

We install the package with pip install sentence-transformers. If you run into issues installing this package, then it might be helpful to install PyTorch first.

Now, we are going to run the following code to transform our document and candidates into vectors:

from sentence_transformers import SentenceTransformer

model = SentenceTransformer('distilbert-base-nli-mean-tokens')
doc_embedding = model.encode([doc])
candidate_embeddings = model.encode(candidates)

We are using DistilBERT as it has shown great performance in similarity tasks, which is what we are aiming for with keyword/keyphrase extraction!

Since transformer models have a token limit, very long documents can cause errors or be silently truncated, depending on the library. In that case, you could consider splitting up your document into paragraphs and mean pooling (taking the average of) the resulting vectors.
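A minimal sketch of that paragraph-splitting idea, assuming paragraphs are separated by blank lines. The names mean_pool, embed_long_document and toy_encode are hypothetical helpers introduced here; toy_encode is only a stand-in so the example runs without downloading a model.

```python
def mean_pool(vectors):
    """Average a list of equal-length vectors element-wise."""
    n = len(vectors)
    return [sum(values) / n for values in zip(*vectors)]


def embed_long_document(text, encode):
    """Embed each non-empty paragraph separately and average the results.

    `encode` is any callable that maps a list of strings to a list of
    vectors (an assumption of this sketch).
    """
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    return mean_pool([list(v) for v in encode(paragraphs)])


def toy_encode(texts):
    """Stand-in encoder for illustration: [word count, character count]."""
    return [[float(len(t.split())), float(len(t))] for t in texts]


long_doc = "first paragraph here\n\nsecond one"
doc_vector = embed_long_document(long_doc, toy_encode)
print(doc_vector)
```

With sentence-transformers you would pass model.encode instead of toy_encode. The result is a single flat vector, so it needs to be wrapped in a list ([doc_vector]) before it has the 2-D shape that the document embedding has elsewhere in this tutorial.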

NOTE: There are many pre-trained BERT-based models that you can use for keyword extraction. However, I would advise you to use either distilbert-base-nli-stsb-mean-tokens or xlm-r-distilroberta-base-paraphrase-v1 as they have shown great performance in semantic similarity and paraphrase identification respectively.

4. Cosine Similarity

In the final step, we want to find the candidates that are most similar to the document. We assume that the most similar candidates to the document are good keywords/keyphrases for representing the document.

To calculate the similarity between candidates and the document, we will be using the cosine similarity between vectors as it performs quite well in high-dimensional spaces:

from sklearn.metrics.pairwise import cosine_similarity

top_n = 5
distances = cosine_similarity(doc_embedding, candidate_embeddings)
keywords = [candidates[index] for index in distances.argsort()[0][-top_n:]]
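For intuition about what the similarity score measures, here is a small pure-Python sketch of cosine similarity and the ranking step. The 2-D vectors are made up for illustration and the helper name cosine is an assumption of this sketch; real embeddings have hundreds of dimensions.

```python
import math


def cosine(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical 2-D embeddings, chosen only for illustration.
toy_doc = [1.0, 1.0]
toy_candidates = {
    "learning": [2.0, 2.0],    # same direction as the document, longer vector
    "function": [1.0, 0.0],    # 45 degrees away from the document
    "unrelated": [-1.0, 1.0],  # orthogonal to the document
}

scores = {word: cosine(toy_doc, vec) for word, vec in toy_candidates.items()}
ranked = sorted(scores, key=scores.get, reverse=True)

print(ranked)
```

Only the direction of a vector matters, not its length, which is why "learning" scores highest even though its vector is twice as long as the document's. Note that the slice argsort()[0][-top_n:] in the snippet above returns the top candidates in ascending order of similarity, so the best keyword is the last element of keywords.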

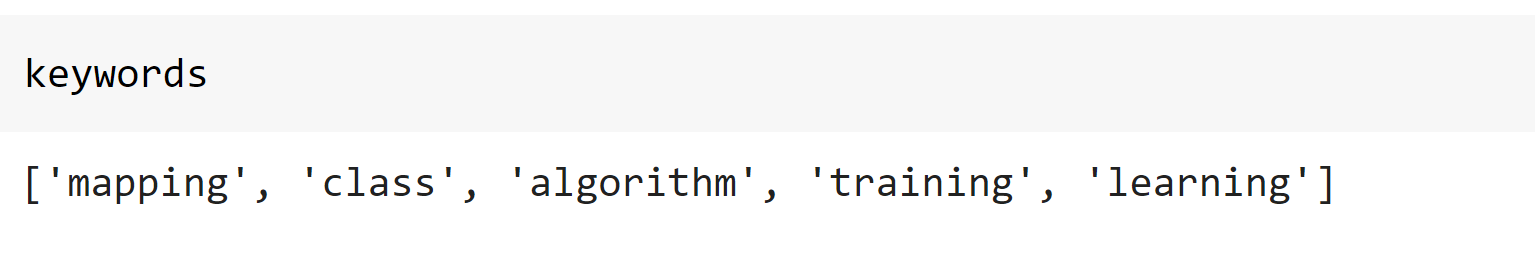

And…that is it! We take the top 5 most similar candidates to the input document as the resulting keywords:

The results look great! These terms definitely look like they describe a document about supervised machine learning.

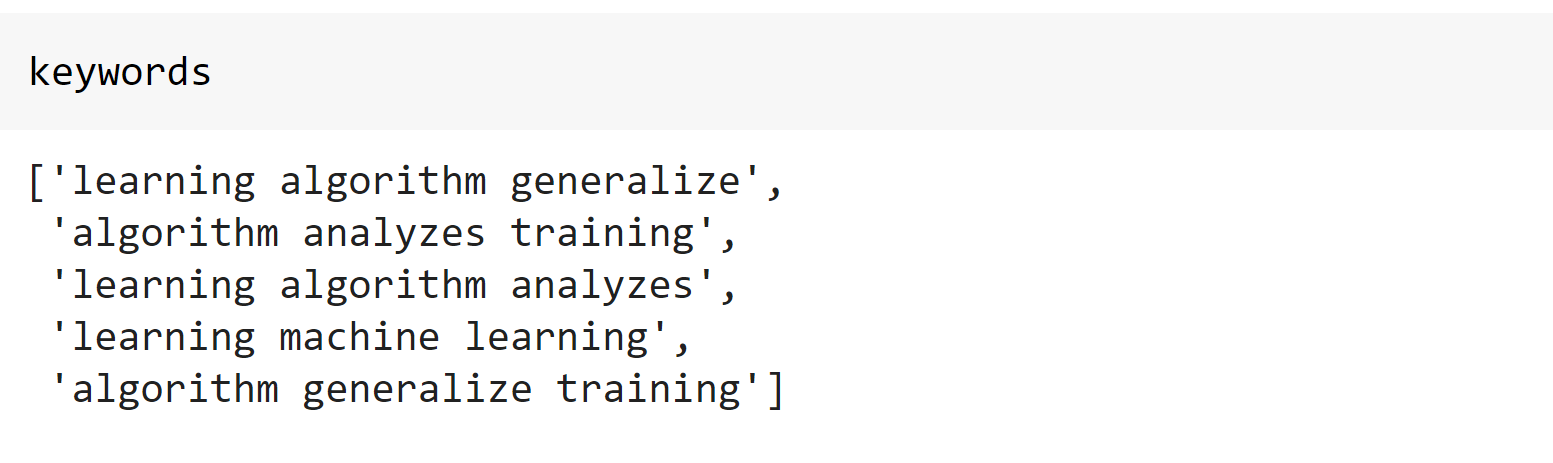

Now, let us take a look at what happens if we change the n_gram_range to (3,3):

It seems that we get keyphrases instead of keywords now! These keyphrases, by themselves, seem to nicely represent the document. However, I am not happy that all keyphrases are so similar to each other.

To solve this issue, let us take a look at the diversification of our results.

5. Diversification

There is a reason why similar results are returned… they best represent the document! If we were to diversify the keywords/keyphrases then they are less likely to represent the document well as a collective.

Thus, the diversification of our results requires a delicate balance between the accuracy of keywords/keyphrases and the diversity between them.

There are two algorithms that we will be using to diversify our results:

- Max Sum Similarity
- Maximal Marginal Relevance

Max Sum Similarity

The maximum sum distance between pairs of data points is reached by the selection of points for which the summed pairwise distance is maximized. In our case, we want to maximize the candidate similarity to the document whilst minimizing the similarity between candidates.

To do this, we select the top 20 keywords/keyphrases, and from those 20, select the 5 that are the least similar to each other:

import numpy as np
import itertools

def max_sum_sim(doc_embedding, word_embeddings, words, top_n, nr_candidates):
    # Similarity of each candidate to the document, and between all candidate pairs
    distances = cosine_similarity(doc_embedding, word_embeddings)
    distances_candidates = cosine_similarity(word_embeddings, word_embeddings)

    # Keep the nr_candidates words that are most similar to the document
    words_idx = list(distances.argsort()[0][-nr_candidates:])
    words_vals = [words[index] for index in words_idx]
    distances_candidates = distances_candidates[np.ix_(words_idx, words_idx)]

    # Find the combination of top_n words that are the least similar to each other
    min_sim = np.inf
    candidate = None
    for combination in itertools.combinations(range(len(words_idx)), top_n):
        sim = sum([distances_candidates[i][j] for i in combination for j in combination if i != j])
        if sim < min_sim:
            candidate = combination
            min_sim = sim

    return [words_vals[idx] for idx in candidate]

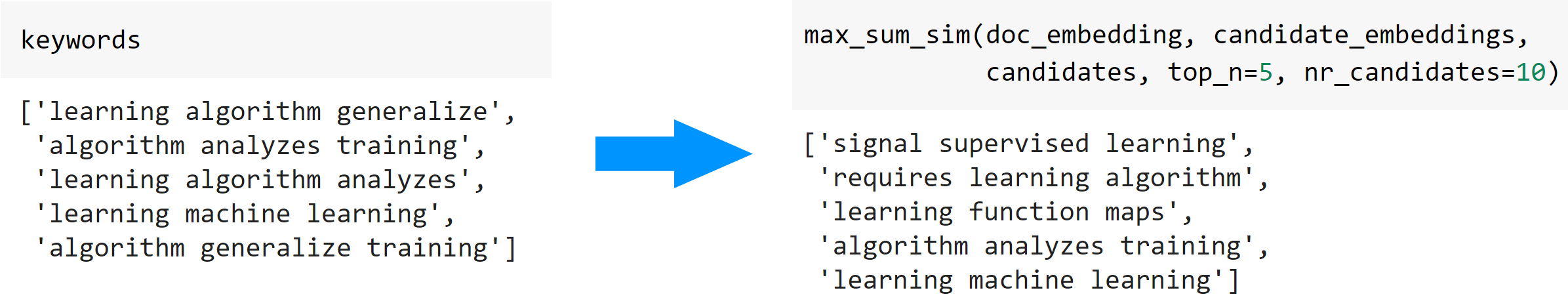

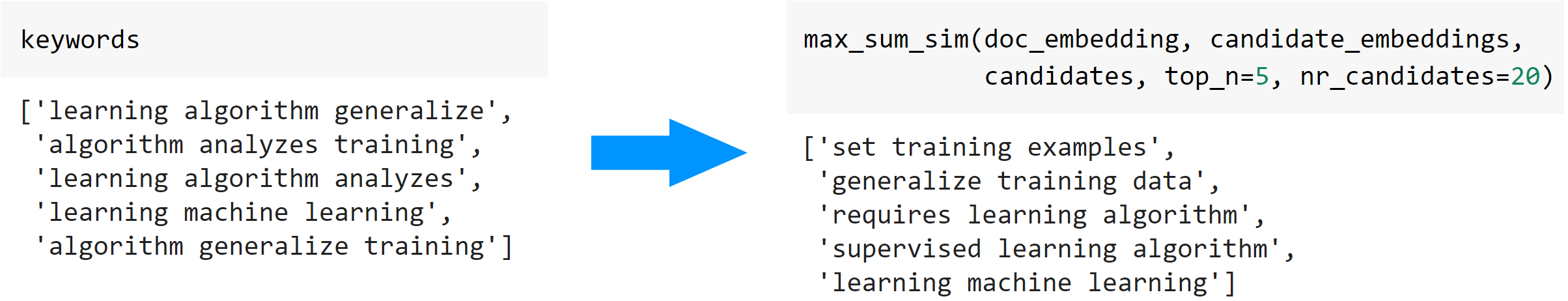

If we set a low nr_candidates, then our results seem to be very similar to our original cosine similarity method:

However, a relatively high nr_candidates will create more diverse keyphrases:

As mentioned before, there is a tradeoff between accuracy and diversity that you must keep in mind. If you increase nr_candidates, there is a good chance that you get very diverse keywords that are not very good representations of the document.

I would advise you to keep nr_candidates less than 20% of the total number of unique words in your document.
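Besides the accuracy trade-off, nr_candidates also drives the run time, because max_sum_sim scores every possible combination of top_n candidates. The short sketch below counts those combinations for a few values; the helper name combinations_to_check is introduced here for illustration, and math.comb requires Python 3.8 or newer.

```python
from math import comb


def combinations_to_check(nr_candidates, top_n):
    """Number of top_n-sized subsets the brute-force search has to score."""
    return comb(nr_candidates, top_n)


for nr_candidates in (10, 20, 40):
    print(nr_candidates, combinations_to_check(nr_candidates, top_n=5))
```

Going from 20 to 40 candidates multiplies the number of combinations by roughly 42, so it pays to keep nr_candidates small.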

Maximal Marginal Relevance

The final method for diversifying our results is Maximal Marginal Relevance (MMR). MMR tries to minimize redundancy and maximize the diversity of results in text summarization tasks. Fortunately, a keyword extraction algorithm called EmbedRank has implemented a version of MMR that allows us to use it for diversifying our keywords/keyphrases.

We start by selecting the keyword/keyphrase that is the most similar to the document. Then, we iteratively select new candidates that are both similar to the document and not similar to the already selected keywords/keyphrases:

import numpy as np

def mmr(doc_embedding, word_embeddings, words, top_n, diversity):
    # Extract similarity within words, and between words and the document
    word_doc_similarity = cosine_similarity(word_embeddings, doc_embedding)
    word_similarity = cosine_similarity(word_embeddings)

    # Initialize candidates and already choose best keyword/keyphrase
    keywords_idx = [np.argmax(word_doc_similarity)]
    candidates_idx = [i for i in range(len(words)) if i != keywords_idx[0]]

    for _ in range(top_n - 1):
        # Extract similarities within candidates and
        # between candidates and selected keywords/phrases
        candidate_similarities = word_doc_similarity[candidates_idx, :]
        target_similarities = np.max(word_similarity[candidates_idx][:, keywords_idx], axis=1)

        # Calculate MMR (named mmr_scores to avoid shadowing the function name)
        mmr_scores = (1 - diversity) * candidate_similarities - diversity * target_similarities.reshape(-1, 1)
        mmr_idx = candidates_idx[np.argmax(mmr_scores)]

        # Update keywords & candidates
        keywords_idx.append(mmr_idx)
        candidates_idx.remove(mmr_idx)

    return [words[idx] for idx in keywords_idx]
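The score that the loop above maximizes in each iteration can be written compactly. Here $d$ is the document embedding, $C$ the set of remaining candidates, $K$ the set of already selected keywords, and $\lambda$ the diversity argument. The symbols are notation introduced here for illustration and describe the function as written above, not necessarily the exact formulation used by EmbedRank:

```latex
c^{*} = \underset{c \in C}{\arg\max}\;
\Big[ (1 - \lambda)\,\cos(c, d) \;-\; \lambda \max_{k \in K} \cos(c, k) \Big]
```

With $\lambda = 0$ the second term vanishes and the selection reduces to plain cosine-similarity ranking. As $\lambda$ approaches 1, similarity to the already selected keywords dominates and relevance to the document matters less.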

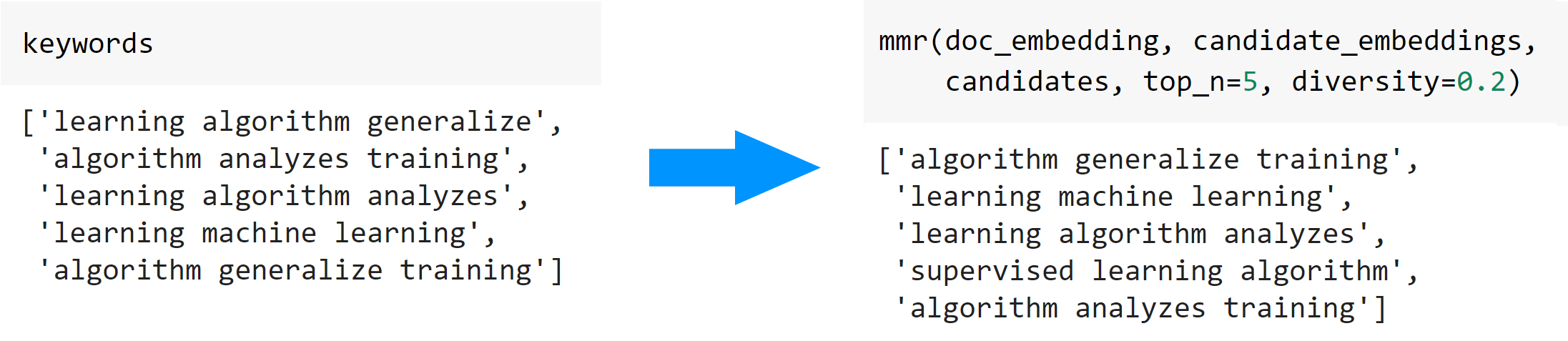

If we set a relatively low diversity, then our results seem to be very similar to our original cosine similarity method:

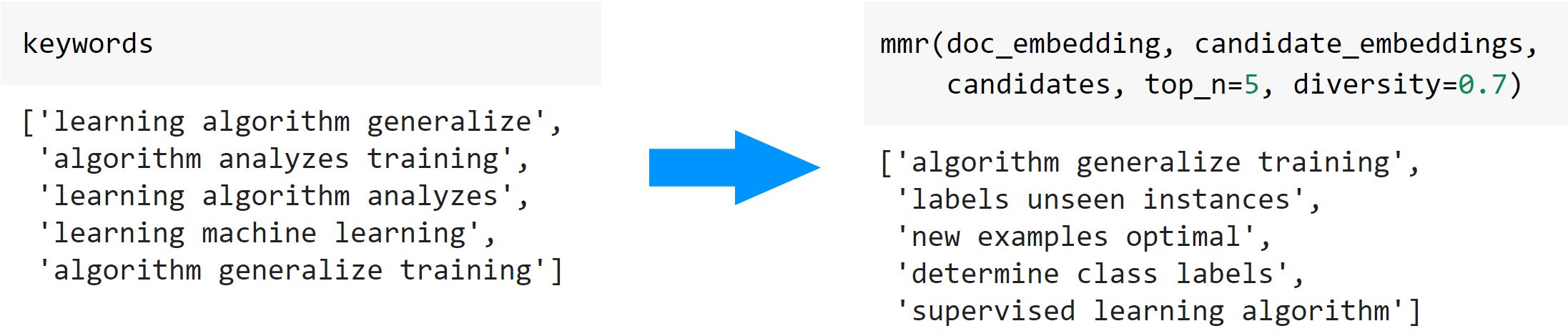

However, a relatively high diversity score will create very diverse keyphrases:
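The effect of the diversity parameter can also be reproduced without loading a model. The sketch below is a toy, pure-Python version of the same greedy selection. The 2-D vectors and the names cosine and toy_mmr are made up for illustration and are not part of KeyBERT or EmbedRank.

```python
import math


def cosine(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def toy_mmr(doc, vectors, top_n, diversity):
    """Greedy MMR over a dict of {phrase: vector}."""
    relevance = {word: cosine(vec, doc) for word, vec in vectors.items()}
    # Start with the phrase that is most similar to the document.
    selected = [max(relevance, key=relevance.get)]
    remaining = [word for word in vectors if word not in selected]

    def mmr_score(word):
        # Redundancy: highest similarity to any already selected phrase.
        redundancy = max(cosine(vectors[word], vectors[s]) for s in selected)
        return (1 - diversity) * relevance[word] - diversity * redundancy

    while remaining and len(selected) < top_n:
        best = max(remaining, key=mmr_score)
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical embeddings: two near-duplicates and one different phrase.
toy_doc = [1.0, 0.0]
toy_vectors = {
    "supervised learning": [1.0, 0.1],
    "supervised learning algorithm": [1.0, 0.2],
    "training data": [1.0, -0.8],
}

low_diversity = toy_mmr(toy_doc, toy_vectors, top_n=2, diversity=0.2)
high_diversity = toy_mmr(toy_doc, toy_vectors, top_n=2, diversity=0.7)

print(low_diversity)
print(high_diversity)
```

With diversity=0.2 the second pick is the near-duplicate "supervised learning algorithm". With diversity=0.7 the redundancy penalty outweighs its higher relevance and "training data" is chosen instead.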

Thank you for reading!

All examples and code in this article can be found here.